Protein binding prediction

Implementation of Capsule Networks for predicting MHC-peptide binding

Problem description

This project implements Capsule Networks (CapsNet) for predicting MHC-peptide binding. It covers dataset analysis, an explanation of the method, the experimental setup, and an evaluation of the results. The advantages of CapsNet are explored through several experiments. The work is a reimplementation of the CapsNet-MHC paper.

CapsNet has been proposed as an alternative to traditional Convolutional Neural Networks (CNNs) to better capture spatial hierarchies. This project aims to implement CapsNet for MHC-peptide binding prediction, evaluating its performance against conventional deep learning models.

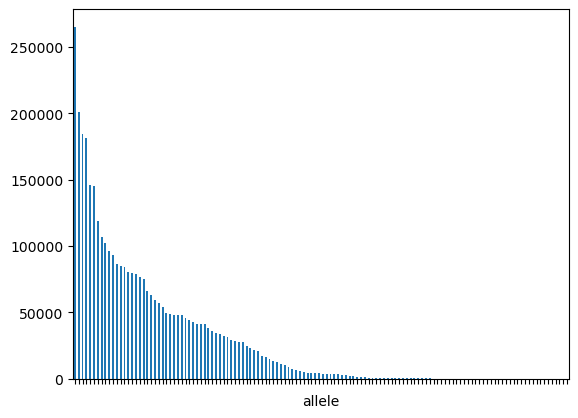

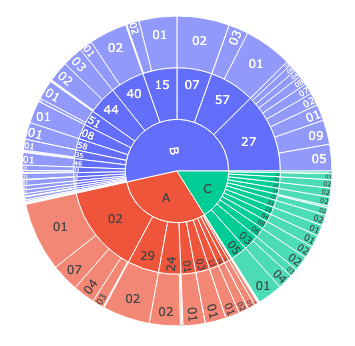

Dataset Analysis

The dataset utilized is the NetMHC dataset. It comprises over 3.6 million peptide-allele pairs labeled for MHC class I binding. The distribution of alleles is heavily imbalanced.

Challenges

- Extreme class imbalance (94.6% non-binding, 5.4% binding)

- Imbalanced allele frequency and presence of unseen alleles in test set

- Need for robust embeddings (e.g., using BLOSUM)

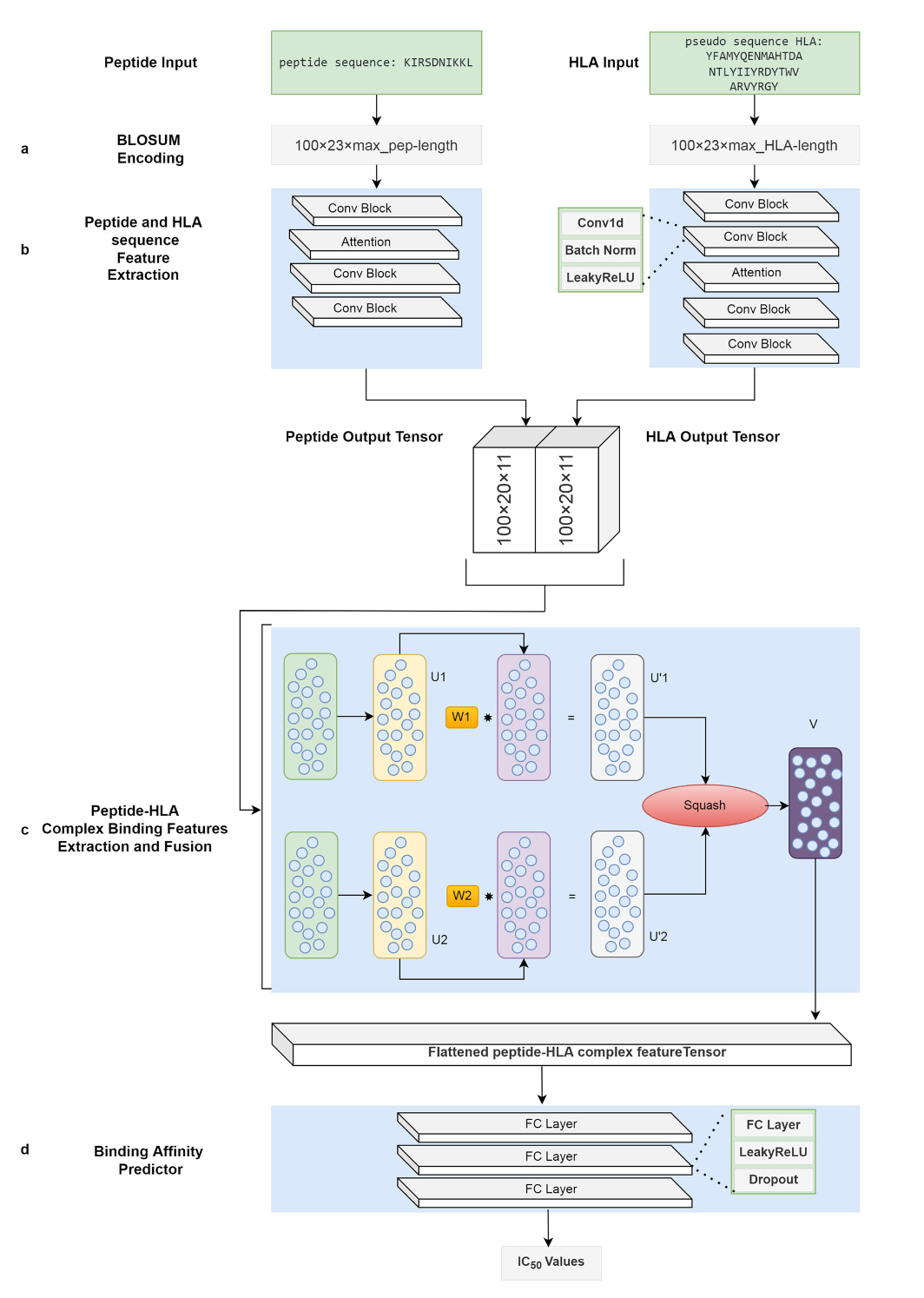

Network Architecture

How did I do it

Capsule Networks represent local features using vectors instead of scalars. Each vector’s norm indicates the probability that a feature is present, while its direction captures spatial relationships. Inputs $u_i$ are transformed via matrices $W_{ij}$, producing $\hat{u}_{j \mid i} = W_{ij} u_i$. The total input to capsule $j$ is the weighted sum

\[s_j = \sum_i c_{ij} \hat{u}_{j \mid i}\]

where $c_{ij}$ are the coupling coefficients, and the final capsule output is obtained by squashing $s_j$:

\[v_j = \frac{\|s_j\|^2}{1 + \|s_j\|^2} \cdot \frac{s_j}{\|s_j\|}\]

Implementation Details
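The two capsule equations above can be sketched in a few lines of plain Python. This is a minimal illustration, not the project's code: the helper names `squash`, `matvec`, and `capsule_output` are hypothetical, and the coupling coefficients `c` are simply passed in (in a full CapsNet they would come from a routing procedure, which is not shown here).

```python
import math

def squash(s):
    """Squashing nonlinearity: v = (|s|^2 / (1 + |s|^2)) * (s / |s|)."""
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        # A zero vector stays zero; this also avoids dividing by zero.
        return [0.0 for _ in s]
    norm = math.sqrt(sq_norm)
    scale = sq_norm / (1.0 + sq_norm) / norm
    return [scale * x for x in s]

def matvec(W, u):
    """Multiply matrix W (list of rows) by vector u."""
    return [sum(w * x for w, x in zip(row, u)) for row in W]

def capsule_output(u, W, c):
    """Compute v_j for one output capsule j.

    u: list of input capsule vectors u_i
    W: list of matrices W_ij (one per input capsule i, for a fixed j)
    c: list of coupling coefficients c_ij (assumed given)
    """
    # Prediction vectors u_hat_{j|i} = W_ij u_i
    u_hat = [matvec(W_i, u_i) for W_i, u_i in zip(W, u)]
    dim = len(u_hat[0])
    # Weighted sum s_j = sum_i c_ij * u_hat_{j|i}
    s = [sum(c_i * uh[d] for c_i, uh in zip(c, u_hat)) for d in range(dim)]
    return squash(s)
```

For example, `squash([3.0, 4.0])` keeps the direction of the input and returns a vector of norm 25/26, so long vectors are mapped to norms just below 1 and short vectors to norms near 0.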

- Sigmoid output for binary classification

- Binary Cross Entropy (BCE) loss used

- Alleles represented using amino acid sequences

- Input features encoded using BLOSUM matrices
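As an illustration of the BLOSUM encoding listed above, each residue of a sequence can be replaced by its row of substitution scores. The sketch below is an assumption about how such an encoding is typically done, not the project's actual code: `encode_sequence` is a hypothetical helper, and `TOY_MATRIX` is a three-residue excerpt (A, R, N) used only to keep the example short. Its values are intended to match the corresponding BLOSUM62 entries, but a real run would load the full 20x20 matrix from a reference source.

```python
# Three-residue excerpt used for illustration only; a real encoder would
# use the full 20x20 substitution matrix loaded from a reference table.
ALPHABET = ["A", "R", "N"]
TOY_MATRIX = {
    "A": {"A": 4, "R": -1, "N": -2},
    "R": {"A": -1, "R": 5, "N": 0},
    "N": {"A": -2, "R": 0, "N": 6},
}

def encode_sequence(seq, matrix=TOY_MATRIX, alphabet=ALPHABET):
    """Map each residue to its row of substitution scores.

    Returns a list with one score vector per residue, so a sequence of
    length L becomes an L x len(alphabet) feature matrix.
    Raises KeyError for residues missing from the matrix.
    """
    return [[matrix[res][other] for other in alphabet] for res in seq]

features = encode_sequence("ARN")
```

The same function could be applied to both the peptide and the allele's amino acid sequence, giving two score matrices as network inputs.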

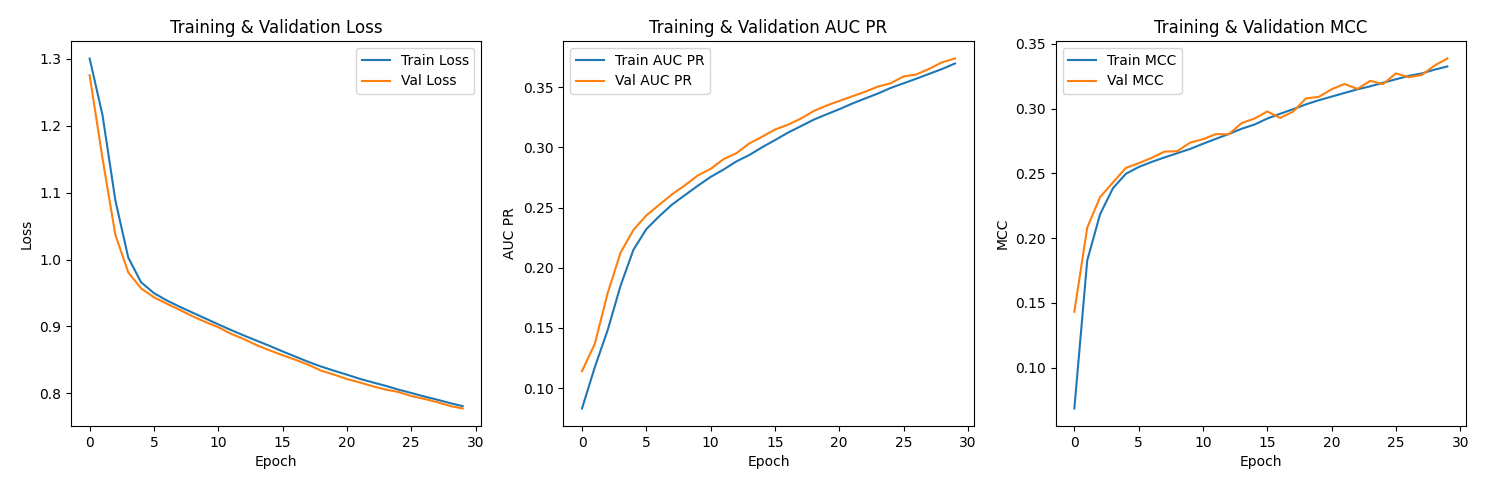

Training

The model was trained using the Adam optimizer with a learning rate of $10^{-6}$. Although this learning rate may appear small, the batch size used was fairly large. The training setup included:

- BLOSUM45, 62, and 80 embeddings compared

- Trained for 10 epochs initially, then retrained on the whole training set with BLOSUM62 for 30 epochs

- Used weighted BCE to compensate for the imbalance

- Used vast.ai for training due to computational cost

- Batch size: 512 (train), 1024 (validation)
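The weighted BCE mentioned in the list above can be sketched as follows. The write-up does not state how the weight was chosen, so this example assumes the common inverse-frequency choice, with the positive class weighted by the ratio of negatives to positives (94.6% / 5.4%). The function name `weighted_bce` and the constant `POS_WEIGHT` are hypothetical.

```python
import math

def weighted_bce(probs, labels, pos_weight, eps=1e-12):
    """Mean binary cross entropy with an extra weight on positive samples.

    probs:  predicted probabilities (sigmoid outputs) in [0, 1]
    labels: ground-truth labels, 0 or 1
    pos_weight: multiplier applied to the loss of positive samples
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

# Assumed weight: negatives / positives, from the class split in the dataset.
POS_WEIGHT = 0.946 / 0.054  # about 17.5
```

With this weight, a missed binder costs roughly 17.5 times as much as a misclassified non-binder. Deep learning frameworks usually offer an equivalent option, for example the `pos_weight` argument of `torch.nn.BCEWithLogitsLoss` in PyTorch.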

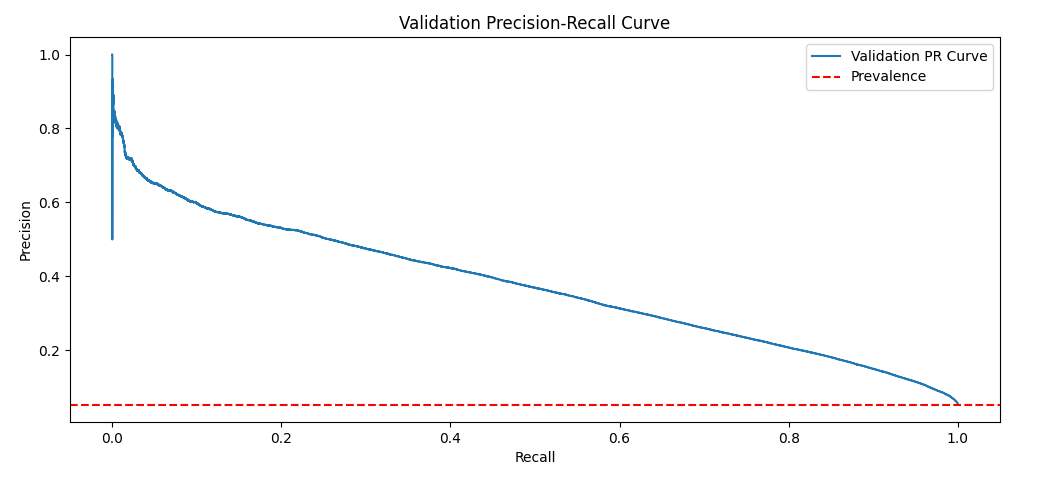

- Evaluated with AUC PR, since correctly identifying the positive (binding) class matters more than overall separation of the two classes, which is what AUC ROC measures
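To make the AUC PR metric concrete, the sketch below computes average precision, a common step-wise estimate of the area under the precision-recall curve. The write-up does not say which estimator was used, so this is an assumption, and `average_precision` is a hypothetical helper that does not treat tied scores specially.

```python
def average_precision(scores, labels):
    """Average precision: mean of the precision values at each positive hit.

    scores: predicted scores, higher means more likely positive
    labels: ground-truth labels, 0 or 1
    """
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("at least one positive label is required")
    # Rank samples from highest to lowest score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = 0
    ap = 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            ap += hits / rank  # precision at the rank of this positive
    return ap / n_pos
```

Unlike AUC ROC, this score depends on the class balance: a scorer that ranks samples at random has an expected AUC PR close to the fraction of positives, which is about 0.054 for this dataset.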

Results

| BLOSUM Matrix | AUC-ROC |
|---|---|
| BLOSUM45 | 0.8407 |
| BLOSUM62 | 0.8375 |
| BLOSUM80 | 0.8407 |

Table 1: AUC-ROC values on the validation set.

| Metric | Value |
|---|---|
| AUC PR | 0.370 |
| MCC | 0.333 |
| Accuracy | 0.798 |
| F1-Score | 0.306 |

Table 2: Test set performance metrics for the final model trained for 30 epochs.

| Metric | Value |
|---|---|
| AUC PR | 0.374 |
| MCC | 0.339 |
| Accuracy | 0.805 |
| F1-Score | 0.314 |

Table 3: Validation set performance metrics for the final model trained for 30 epochs.
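For reference, the threshold-based metrics in Tables 2 and 3 (accuracy, F1-score, MCC) all follow from the confusion matrix of binarized predictions. The sketch below shows the standard formulas. The helper name `classification_metrics` and the example counts are hypothetical, and the decision threshold used in the project is not stated in this write-up.

```python
import math

def classification_metrics(tp, tn, fp, fn):
    """Accuracy, F1-score, and Matthews correlation coefficient (MCC)
    from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    # F1 is the harmonic mean of precision and recall.
    f1_den = 2 * tp + fp + fn
    f1 = 2 * tp / f1_den if f1_den else 0.0
    # MCC uses all four cells, so it stays informative under class imbalance.
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0
    return {"accuracy": accuracy, "f1": f1, "mcc": mcc}

# Hypothetical counts, chosen only to exercise the formulas.
example = classification_metrics(tp=2, tn=5, fp=1, fn=2)
```

Because accuracy counts the abundant negatives, it can stay high while F1 and MCC remain modest, which is the pattern seen in the tables above.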

Discussion

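CapsNet-MHC showed strong results given the class imbalance: the test AUC PR of 0.370 compares with a dataset-wide positive rate of 5.4%. An alternative NLP-based approach using fastText-like embeddings was also tested, but it proved computationally prohibitive because of the very large number of training pairs.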

Conclusion

Capsule Networks effectively capture peptide-allele interactions. While the results are promising, further tuning and training on all folds are necessary to realize the model's full potential. Future efforts should focus on hyperparameter tuning, reconsidering the attention approach, and deeper analysis of capsule outputs for explainability.

References

2023

- CapsNet-MHC predicts peptide-MHC class I binding based on capsule neural networks. Communications Biology, 07–09 Jul 2023